Spacemesh: The 1:N Guide

This has been one of the more difficult guides to write, mostly because I did not need to merge anything from my 1:1 setup. A series of unfortunate events resulted in me missing the cycle gap completely and basically starting over with fresh state on 1:N. This guide will cover the theory of merging identities (basically taking your 1:1 setup and making it 1:N without losing rewards), but I don’t actually have a node to demonstrate it on.

Important bits before we get started

You should consult the official documentation if anything does not make sense.

I am not responsible if something goes wrong with your setup because of my guide. I try my best to research and actually perform the steps but it’s not always possible.

It’s best to give this a read through first, and then go through and follow the steps.

You should back up your key files if applicable (key.bin, nipost, postdata_metadata.json, etc.) before making big changes. And honestly, you should do it periodically anyway.

The latest file references can be found on my GitHub

If using Team24, make sure to get the latest PoETs and pubkeys from the Team24 site

I reference new nodes and old nodes. New nodes in this guide means the new nodes you will be setting up to run 1:N. Old nodes are the old nodes you were using before 1:N.

The nodes you are using for 1:N can only be used as a node - you should not try and generate any PoST data with them.

You can start by migrating just one old node to make sure it works and get familiar with the steps. This is part of why it’s a good idea to just set up a fresh node for your 1:N setup. Then you can merge 1 old node to your new node and make sure it all works. Then go back and migrate others.
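For the backup advice in the list above, here is a minimal sketch of what a backup helper could look like. The function name and the backup location are my own placeholders, and the file names are just the ones mentioned above, so adjust the list to whatever your setup actually contains.

```shell
# Copy the key files named in this guide into a timestamped backup folder.
# Usage: backup_key_files <source_dir> <backup_root>
# Hypothetical helper: the name and folder layout are illustrative only.
backup_key_files() {
  bk_src="$1"
  bk_dest="$2/$(basename "$bk_src")-$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$bk_dest" || return 1
  # Anything from the list that is not present in the source is skipped.
  for bk_name in key.bin nipost postdata_metadata.json; do
    if [ -e "$bk_src/$bk_name" ]; then
      cp -a "$bk_src/$bk_name" "$bk_dest/" || return 1
    fi
  done
  # Print where the backup ended up.
  echo "$bk_dest"
}
```

Something like `backup_key_files /media/spacemesh/post01 ~/spacemesh_backups` would then leave a dated copy next to your other backups. Keys that have already moved into an identities folder would need to be added to the list.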

The final product will look something like this:

Preparation

All of your nodes should be on version 1.4.X before you attempt to merge them to a 1:N setup. This is because there are some big changes to how state is handled in 1.4.X which requires the node being merged to be at least on version 1.4.X. My suggestion is to use the latest version available.
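If you want to script the version requirement, a small check like the one below could work. It is only a sketch: the function name is made up, and it assumes you already have the version string (for example from the release file name you downloaded) in the usual v1.4.4 style.

```shell
# Return success if a version string such as "v1.4.4" is 1.4.0 or newer.
# Hypothetical helper; it only looks at the major and minor numbers.
is_at_least_1_4() {
  v_ver="${1#v}"             # drop a leading "v" if present
  v_major="${v_ver%%.*}"     # text before the first dot
  v_rest="${v_ver#*.}"
  v_minor="${v_rest%%.*}"    # text between the first and second dot
  # Reject anything that is not a plain number.
  case "$v_major" in ''|*[!0-9]*) return 2 ;; esac
  case "$v_minor" in ''|*[!0-9]*) return 2 ;; esac
  [ "$v_major" -gt 1 ] || { [ "$v_major" -eq 1 ] && [ "$v_minor" -ge 4 ]; }
}
```

For example, `is_at_least_1_4 v1.3.12 || echo "upgrade this node first"` would flag a node that is still too old to merge.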

I also recommend setting up a completely new node to merge your old nodes into - instead of trying to convert an existing node. It’s just easier and cleaner. I also recommend using standard naming conventions and folder structure so that it is less confusing when working with your config and stack files.

My folder structure looks like this:

I have a base folder called spacemesh. Inside that I have a folder for each node I will run. Because of how nodes are set up, you need a node for each set of PoET servers you will be using. So in the image I just have early_team24, but if you want to run dual PoETs you would need to add another folder for late_team24, etc.

Within the node folder, there is a node_data folder and a config.json. Historically I’ve seen the config named config.mainnet.json but that just seems repetitive and pointless, so I use config.json now. Previously I had the node_data folder called sm_data, but the new official guides reference it as node_data so since I set up an entirely new node I followed this convention.

Everything inside the node_data folder is generated automatically when the node is first started, except the keys inside the identities folder; you will add those later in the merge process.

So to recap, you only need to manually create the spacemesh, early_team24 (or whatever your PoET servers are), node_data and config.json. The rest will be created automatically when we first start our node.

Setting up Your Primary Node

You will need to do this step for each new node you will run. Yes, it’s called 1:N but really the 1 is however many PoET server groups you are connecting to. I use both early and late Team24 PoETs, so I set up two nodes (2:N). In all of my examples and files, it will show two nodes, however you can adjust it depending on your use case. The main point is that you need a node for each set of PoET servers.

I will briefly cover setting up a new node:

Make sure Linux is fully updated

sudo apt update && sudo apt upgrade -y

Then create your spacemesh folder in your home directory

mkdir ~/spacemesh

Now create a folder for each node

mkdir ~/spacemesh/early_team24
mkdir ~/spacemesh/late_team24

Then create the node_data folder in each node (only shown for early_team24):

mkdir ~/spacemesh/early_team24/node_data

Then create the config.json

nano ~/spacemesh/early_team24/config.json

Now copy and paste the contents of the config you want to run

Github Link: Team24, Early, 1:N

Github Link: Team24, Late, 1:N

Github Link: Team24, Default, 1:N

In my configs I follow a standard convention for my ports. As you can see, the Early T24 uses ports 6001, 9192, 9193, 9194, 10001. Then Late uses 6002, 9292, 9293, 9294, 10002. Following this convention, if I wanted to also run the default 12 hour, I’d create another config and set it up to run ports 6003, 9392, 9393, 9394, 10003. The reason I do this is that if I ever needed to access these ports outside of my server, I can do so by forwarding them in docker. Ports being forwarded must be unique for the host.
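The port convention above boils down to simple arithmetic on the node number. As a sketch (the function name is just something I made up for illustration), it could be written like this:

```shell
# Print the five ports for the Nth node under the numbering convention above.
# Usage: ports_for_node <n>   (1 = Early, 2 = Late, 3 = Default)
# The 9x92-9x94 pattern only holds for n between 1 and 9.
ports_for_node() {
  p_n="$1"
  echo "$((6000 + p_n)) $((9092 + 100 * p_n)) $((9093 + 100 * p_n)) $((9094 + 100 * p_n)) $((10000 + p_n))"
}
```

Running `ports_for_node 3` prints `6003 9392 9393 9394 10003`, which matches the ports I would use for the default 12 hour node.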

Github Link: 1N Node

With the config and stack files set up, it’s safe to start the node and let it automatically create a bunch of the needed files and folders. You will want to start the node and let it completely start up - this means the gRPC servers should be running and it will want to start syncing. You can confirm this by just running any of the grpcurl commands. From here you have two options: you can either let it start syncing manually, which could take a day or two, or you can use the new quicksync option.

If you want to manually quicksync, I suggest doing it this way:

First, stop the node. Then delete the following files from the node_data folder:

state.sql

state.sql-shm

state.sql-wal

Create a folder for the quicksync files:

mkdir ~/quicksync && cd ~/quicksync

Download the latest zst file

wget http://quicksync.spacemesh.network/latest.zst

Then extract it

zstd -d --memory=2048MB latest.zst

Now copy in the file we just extracted (zstd writes it out as latest, without the .zst extension), renaming it to state.sql

cp latest ~/spacemesh/early_team24/node_data/state.sql

You can replace the path with wherever your node_data folder is, and copy the state.sql into each node’s folder. Make sure the node is off when you do this!!

The file is pretty big (30GB or so) and it might take a bit to extract and transfer.
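Because the file is so large, it is worth a quick sanity check before starting the node again. The sketch below relies on state.sql being an SQLite database, which always begins with the text "SQLite format 3". The function name is my own, and this only checks the header, not that the database is complete.

```shell
# Return success if the file starts with the standard SQLite header string.
# Hypothetical helper; a passing check does not prove the copy is complete.
looks_like_sqlite() {
  [ -f "$1" ] || return 1
  # Read the first 15 bytes and drop any NUL bytes before comparing.
  [ "$(head -c 15 "$1" | tr -d '\000')" = "SQLite format 3" ]
}
```

For example: `looks_like_sqlite ~/spacemesh/early_team24/node_data/state.sql && echo "state.sql looks like a database"`. If it fails, the usual suspect is copying the compressed .zst file instead of the extracted one.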

Whether you did the quicksync or let it sync manually, the node should be off for the next steps as well. You need to delete the local.key for each of the new nodes you are setting up.

rm ~/spacemesh/early_team24/node_data/identities/local.key

This is essential to running 1:N. In the next step “Merging” you will be moving the key for each set of PoST data into this identities folder, and deleting the local.key tells go-spacemesh you want to run in unsupervised mode.

Merging

This is without a doubt the hardest part. Under ideal conditions, you would have been running a go-spacemesh node on 1.3.X without a PoST service, and you have now upgraded that node to 1.4.X and have shut it down. In this simplest use case, you can easily run the merge tool and get everything moved over. To do this, for every go-spacemesh node you were previously running (old nodes), go into the identities folder and rename the local.key (not delete like we did above - you will rename it) to something unique.

When you upgraded to 1.4.X, your key.bin was moved to the identities folder and renamed local.key. For my use case, I name the local.key the same as the PoST data folder it is connected to. So if my PoST data is located at /media/spacemesh/post01 then the local.key associated with the go-spacemesh instance it was connected to would be called post01.key. In this first step, we are preparing to have that key moved by the merge tool to the new node you set up; this is why we must rename it first.

I prefer to completely merge nodes one at a time, and the steps should look something like this:
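The renaming convention described above (key named after its PoST data folder) can be sketched as a small helper. The function name and the safety checks are my own additions, not something the merge tool requires.

```shell
# Rename identities/local.key after the PoST data folder it belongs to.
# Usage: rename_local_key <old_node_data_dir> <post_data_dir>
# Hypothetical helper that follows the naming convention from this guide.
rename_local_key() {
  r_ids="$1/identities"
  r_new="$(basename "$2").key"
  [ -f "$r_ids/local.key" ] || { echo "no local.key in $r_ids" >&2; return 1; }
  # Refuse to overwrite a key that already carries the target name.
  [ ! -e "$r_ids/$r_new" ] || { echo "$r_new already exists" >&2; return 1; }
  mv "$r_ids/local.key" "$r_ids/$r_new" && echo "$r_ids/$r_new"
}
```

With my paths, `rename_local_key ~/spacemesh/old_node/node_data /media/spacemesh/post01` would leave a post01.key in the old node's identities folder. Make sure the old node is stopped first.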

Make sure you have updated the old node to 1.4.X

Make sure you have renamed the local.key for your old node to something unique

If you are running docker you probably don’t have go-spacemesh. To run the merge tool you will need to download it. To do this, perform the following:

Install dependencies

sudo apt install libpocl2 unzip -y

wget https://storage.googleapis.com/go-spacemesh-release-builds/v1.4.4/go-spacemesh-v1.4.4-linux-amd64.zip

Unzip

unzip go-spacemesh-v1.4.4-linux-amd64.zip

Move into folder

cd go-spacemesh-v1.4.4-linux-amd64

Inside here there are a few files, including the merge-nodes tool. The command is quite simple:

./merge-nodes --from <source_path> --to <target_path>

The ‘from’ would be the old node you are migrating, and the ‘to’ would be the new node you just set up. If you are running multiple PoET servers make sure to merge the old node to the correct new node. You should also be specifying the node_data folder. So for example:

./merge-nodes --from ~/spacemesh/old_node/node_data --to ~/spacemesh/early_team24/node_data

Once the merge is done, you should see the key from the old node inside the ‘identities’ folder of the new node. Repeat this, making sure you are merging the old node to the correct new node that has the PoET servers you want to use for it.
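To double check the result of a merge, something like the following could be run against the new node. This is only a sketch with a made-up function name. It checks the two things this guide cares about: the renamed key arrived, and no local.key is sitting in the new node's identities folder.

```shell
# Confirm a merged key is present in the new node and that no local.key remains.
# Usage: check_merged_key <new_node_data_dir> <key_file_name>
# Hypothetical helper; it inspects files only and does not talk to the node.
check_merged_key() {
  c_ids="$1/identities"
  if [ -e "$c_ids/local.key" ]; then
    echo "local.key is still present in $c_ids" >&2
    return 1
  fi
  if [ ! -f "$c_ids/$2" ]; then
    echo "$2 not found in $c_ids" >&2
    return 1
  fi
  echo "found $2"
}
```

For example: `check_merged_key ~/spacemesh/early_team24/node_data post01.key`.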

For those with more complicated setups, where you were using remote post with a post_service on a separate host, you may need to move things manually. Or if you need to merge old nodes to a new server that has your new nodes, you can move just the folders/files you need onto a USB drive and complete the migration there.

The steps to manually merge are laid out pretty well in the official docs.

Once your old nodes have been merged to your new node, you can start up the new nodes. When you start them up you should see in the logs that it finds all of the keys you added to the identities folder.

PoST Service

By now you should have a new node running on 1.4.X with all the keys for the PoST data in the identities folder. The new nodes should also be fully synced. Because the PoST services run independently of the nodes, you can safely start and stop them while leaving the node running.

In my setup, all my drives are connected directly to the server on which I will be running both my nodes and my PoST services. If your node is remote, you may need to modify some things in this guide.

Also, for my setup, all of my PoST data is located at /media/spacemesh - with a subfolder for each PoST data. For example:

/media/spacemesh/node011

You will need to bind mount that location for each PoST service you run. You will need to run a dedicated PoST service for each set of PoST data. However, the PoST service uses up almost no resources outside of the cycle gap. You can also safely stop all of your PoST services outside of the cycle gap (just make sure to remember to turn them back on!).
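Since every set of PoST data needs both its own PoST service and a matching key on a node, it can help to list which PoST folders have a key in place. The sketch below assumes my naming convention (key named after the PoST folder) and that each PoST data folder contains a postdata_metadata.json. The function name is made up.

```shell
# For each PoST data folder under a base path, report whether a key with the
# same name exists in a node's identities folder.
# Usage: check_post_keys <post_base_dir> <node_data_dir>
# Hypothetical helper that assumes keys are named <post_folder>.key.
check_post_keys() {
  k_missing=0
  for k_dir in "$1"/*/; do
    # Skip anything that does not look like a PoST data folder.
    [ -f "${k_dir}postdata_metadata.json" ] || continue
    k_name="$(basename "$k_dir")"
    if [ -f "$2/identities/$k_name.key" ]; then
      echo "ok      $k_name"
    else
      echo "missing $k_name"
      k_missing=1
    fi
  done
  return "$k_missing"
}
```

Running `check_post_keys /media/spacemesh ~/spacemesh/early_team24/node_data` lists one line per PoST folder. If you split your PoST data across two nodes like I do, a "missing" line for one node is expected as long as the other node has that key.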

Also, because our node config no longer contains our threads/nonces, we will specify them in our PoST service stack file. The PoST service does not have a config, everything will be in our command. My recommendation is to just set up one PoST service and then make sure it’s working, then add them all.

Start by creating a new stack file in Portainer.

Github: Team24 Early PoST Service

Github: Team24 Late PoST Service

A few things to note:

Make sure under volumes you are pointing to your PoST data.

The ‘--address’ should be the IP of the container that has the node you want to connect it to, plus the applicable port.

Set threads and nonces to whatever you want; the values in the stack file are just examples.

The ‘--operator-address’ allows us to query the state of our PoST services.

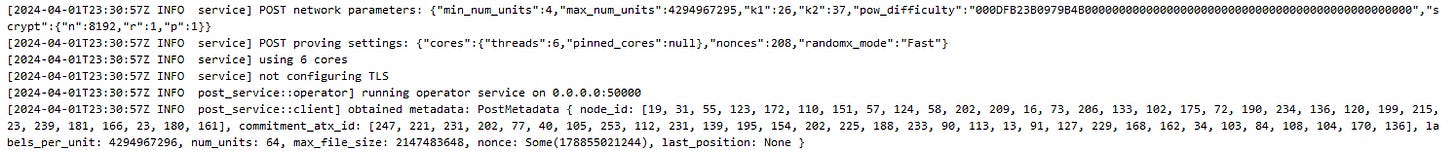

Once you have the stack file set up, deploy it. You should see something like:

And then you should see on your node that a PoST service was registered.

That’s it. After you get your PoST service working for each of your PoST data, everything should be connecting and working. There are a few things you can do to make sure everything is in a good state.

The first thing I usually do is check the PostState.

grpcurl --plaintext 172.18.0.101:9194 spacemesh.v1.PostInfoService.PostStates

You should get a response like:

{
  "states": [
    {
      "id": "gIuNdWb5u2OBREiaiCpkDC5sDEM+pJlq6rNTBSR99C0=",
      "state": "IDLE",
      "name": "node131.key"
    },
    {
      "id": "oZuvLE+zGDJCHC5vW1BC07w3KOcbhuTZ8VUv5wgAYS8=",
      "state": "IDLE",
      "name": "node151.key"
    }
  ]
}

For each PoST service you should get a state, along with the key that you renamed earlier in the process.
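With a lot of identities the JSON gets long, so a one-line-per-key summary can be easier to scan. The sketch below is a made-up helper that reads the response from standard input and only relies on the pretty-printed layout shown above (one field per line), so it needs nothing beyond awk.

```shell
# Summarize a PostStates response read from stdin as "name state" lines.
# Hypothetical helper; it relies on one JSON field per line, as shown above.
summarize_post_states() {
  awk -F'"' '
    /"state":/ { state = $4 }
    /"name":/  { name = $4 }
    # A closing brace ends one entry; print it if a name was seen.
    /^[ \t]*[}]/ {
      if (name != "") { print name, state }
      name = ""; state = ""
    }
  '
}
```

You would pipe the grpcurl output into it, for example `grpcurl --plaintext 172.18.0.101:9194 spacemesh.v1.PostInfoService.PostStates | summarize_post_states`, and for the response above get `node131.key IDLE` and `node151.key IDLE`.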

You can also check your event stream

grpcurl -plaintext -d '' 172.18.0.101:9193 spacemesh.v1.AdminService.EventsStream

Here you should see some familiar states. I don’t think these are really all that helpful most of the time - but if you are earning rewards for your nodes then you should see your layers here like usual. That is a good indication everything is good.